What Is Deep Learning? | DL Book - Chapter 1: Introduction

The video version of this blog post is available here

I recently decided to refresh my knowledge of deep learning and gain an even deeper understanding of the field.

To that end, I am going to read through the Deep Learning book by Ian Goodfellow et al.

In this blog series, I am going to write about the key ideas, implement them in code, and apply the math to some problems to build intuition for these concepts.

I'll also try to create some tasks, so that you, the reader, can check your understanding of the content.

I aim to write a blog post for each chapter in the book. Larger chapters might have multiple blog posts.

Why read this book?

This book has been recommended by many well-known figures in the AI field over the years, including Greg Brockman. Despite being almost a decade old, it is still relevant because it covers the fundamental concepts and maths of deep learning, which haven't changed much.

Feed-forward neural networks and backpropagation are still the building blocks of newer architectures such as transformers and diffusion models.

Plus, it is still being recommended by people in the field today.

Chapter 1: Introduction

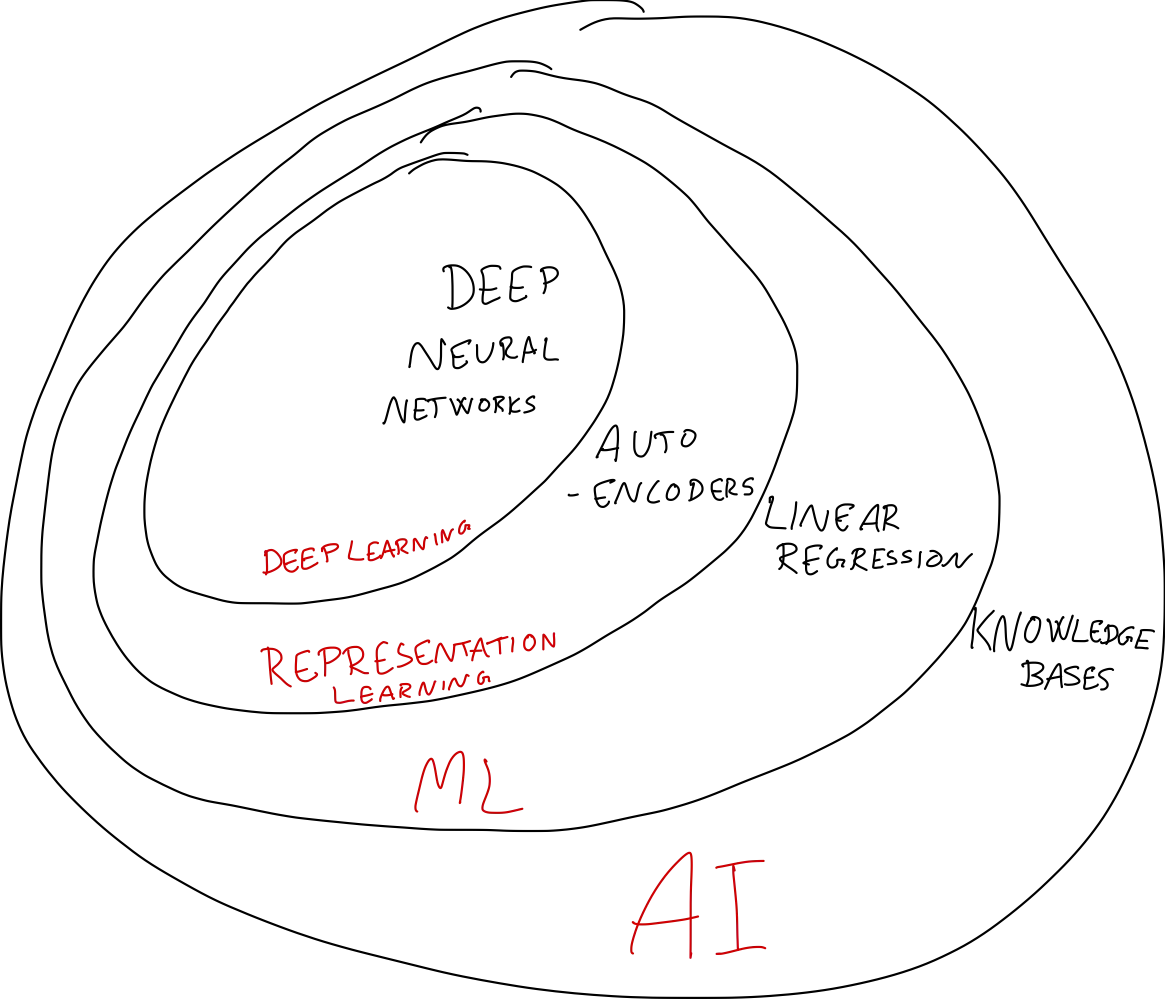

Let's start by looking at how DL (Deep Learning), ML, and AI are related.

AI and ML

AI is a field focused on creating machines able to act/think intelligently. ML is a subset of this field which achieves this goal by extracting patterns from data. Here the representation of the data is very important. Good representations (which are built up of features) can make it easier for the ML algorithms to find patterns. Representations can be handmade, but this can be cumbersome.
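To make the point about representations concrete, here is a minimal sketch using toy data I made up for illustration (the `ring`, `to_polar`, and `classify_by_radius` helpers are hypothetical names, not from the book's code). Two classes of points sit on circles of radius 1 and 3. In Cartesian coordinates, a threshold on x alone cannot separate them, because some outer points have x values inside the inner circle's range. In polar coordinates, a single threshold on the radius is enough.

```python
import math

def ring(radius, n=8):
    """Return n evenly spaced points on a circle of the given radius."""
    return [
        (radius * math.cos(2 * math.pi * k / n),
         radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]

inner = ring(1.0)  # toy class "inner"
outer = ring(3.0)  # toy class "outer"

def to_polar(point):
    """Change of representation: (x, y) -> (radius, angle)."""
    x, y = point
    return math.hypot(x, y), math.atan2(y, x)

def classify_by_radius(point, threshold=2.0):
    """In the polar representation, one threshold separates the classes."""
    radius, _angle = to_polar(point)
    return "inner" if radius < threshold else "outer"

# Cartesian view: some outer points have x values inside the inner range,
# so no single threshold on x can split the two classes.
inner_xs = [x for x, _ in inner]
outer_xs_inside = [x for x, _ in outer if min(inner_xs) <= x <= max(inner_xs)]

print(len(outer_xs_inside) > 0)                               # overlap exists
print(all(classify_by_radius(p) == "inner" for p in inner))   # radius works
print(all(classify_by_radius(p) == "outer" for p in outer))
```

The data did not change, only the way it is described, and that alone turned a hard problem into a trivial one.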

Representation Learning

Representation Learning solves this problem by learning useful representations directly from the data. A good example of this is the (shallow) autoencoder. An autoencoder maps the input to a latent space, which is typically smaller than the input, and then maps the latent code back to the input space. The goal is to recreate the original input. The model is therefore trained to create useful representations of the input in the latent space.
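Here is a minimal sketch of that idea, assuming the simplest possible setup: a linear autoencoder with a one-number latent space, trained with plain gradient descent on toy 2-D data that I made up. The names (`encode`, `decode`, `train`), the initial weights, and the learning rate are all my own choices for illustration. Real autoencoders use nonlinear layers and a deep learning framework.

```python
# Toy data: 2-D points that all lie on the line x2 = 2 * x1,
# so a single latent number is enough to describe each point.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]

def encode(x, w_enc):
    """Map a 2-D input to a 1-D latent code."""
    return w_enc[0] * x[0] + w_enc[1] * x[1]

def decode(z, w_dec):
    """Map the 1-D latent code back to the 2-D input space."""
    return (w_dec[0] * z, w_dec[1] * z)

def reconstruction_loss(w_enc, w_dec):
    """Mean squared reconstruction error over the toy data set."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, w_enc), w_dec)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train(steps=500, lr=0.01):
    w_enc = [0.1, 0.1]  # arbitrary small starting weights
    w_dec = [0.1, 0.1]
    initial_loss = reconstruction_loss(w_enc, w_dec)
    for _ in range(steps):
        grad_enc = [0.0, 0.0]
        grad_dec = [0.0, 0.0]
        for x in data:
            z = encode(x, w_enc)
            x_hat = decode(z, w_dec)
            err = (x_hat[0] - x[0], x_hat[1] - x[1])
            # Chain rule: gradient of the squared error w.r.t. the latent code.
            dz = 2.0 * (err[0] * w_dec[0] + err[1] * w_dec[1])
            for i in range(2):
                grad_dec[i] += 2.0 * err[i] * z / len(data)
                grad_enc[i] += dz * x[i] / len(data)
        for i in range(2):
            w_enc[i] -= lr * grad_enc[i]
            w_dec[i] -= lr * grad_dec[i]
    return initial_loss, reconstruction_loss(w_enc, w_dec)

initial_loss, final_loss = train()
print(initial_loss, final_loss)  # the loss should drop substantially
```

Nobody told the model that the points lie on a line. It finds a one-number code for them only because that is what it takes to reconstruct the input.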

Deep Learning

DL is a form of Representation Learning concerned with learning complex representations. Features of the data combine into simple representations, which in turn combine into more complex ones. In other words, the model learns a hierarchy of concepts, with each concept built from simpler ones.

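The standard example of a DL model is the multi-layer perceptron (MLP), also known as a feedforward deep neural network (DNN). A deep neural network is a neural network with hidden layers (layers other than the input and output layers) stacked between the input and the output. How many hidden layers a network needs before it counts as deep is not strictly defined; the book notes that there is no consensus on this.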
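Here is a minimal sketch of an MLP forward pass in plain Python. The `mlp_forward` function accepts any number of layers; for brevity, the demo uses a single hidden layer with weights picked by hand (not learned) so that the network computes XOR. The function names and the list-of-lists weight layout are my own assumptions, not a library API.

```python
def relu(values):
    """Rectified linear unit, applied element-wise."""
    return [max(0.0, v) for v in values]

def dense(x, weights, bias):
    """One fully connected layer; weights[j] holds the inputs to unit j."""
    return [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weights, bias)
    ]

def mlp_forward(x, layers):
    """Apply ReLU hidden layers in sequence, then a linear output layer."""
    h = list(x)
    for weights, bias in layers[:-1]:
        h = relu(dense(h, weights, bias))
    weights, bias = layers[-1]
    return dense(h, weights, bias)

# Hand-picked weights: one hidden layer with two units, one output unit.
xor_layers = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer
    ([[1.0, -2.0]], [0.0]),                   # output layer
]

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = {x: mlp_forward(x, xor_layers)[0] for x in inputs}
for x in inputs:
    print(x, outputs[x])
```

XOR cannot be computed by a single linear layer on the raw inputs. The hidden layer first re-represents the input, and the output layer then only needs a linear function of that new representation.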

Below is a drawing of how the different fields relate to each other, with some examples:

This figure is based on figure 1.4 in the Deep Learning book by Goodfellow et al.


DL and Neuroscience

This chapter also covers some history of the deep learning field, which I won't cover here. However, I found the part about the connection between deep learning and neuroscience quite interesting. I have always believed the field of AI to be heavily inspired by neuroscience, which it is to a large extent. However, the authors argue that neuroscience shouldn't be taken as a guide for the field. For one, we don't know exactly how the brain works. Also, improvements have sometimes come from moving away from complicated, biologically inspired approaches toward simpler ones. ReLU is a good example of this.